How to Protect Your Data While Using AI Chatbots

Step-by-step instructions on how to opt out of data sharing with AI chatbots, including ChatGPT, Claude, Microsoft Copilot, and others.

The major AI models have shown the world impressive capabilities, from writing Shakespearean-style sonnets to performing hardcore data analysis. But with great power comes great data-sucking demands. All of the major large language models (LLMs), including ChatGPT, Claude.ai, Google’s Gemini, X’s Grok, Microsoft’s Copilot and Meta.ai, rely on petabytes of training data, some of which comes from users like you.

Whenever you prompt ChatGPT to sing you a song or tell Gemini to clean up some text, you’re helping their parent companies train the foundation models that power them. For many people, perhaps most, this is not a problem. However, for others — including investigative journalists, human rights advocates, corporations, and lawyers — using these LLMs would mean adding their proprietary data to the great mass of training materials for these AIs.

In a paper published last year, researchers spent $200 worth of LLM tokens to extract personally identifiable information from AI chatbots, including names, emails, phone numbers, URLs, code snippets, Bitcoin wallet addresses and even NSFW material. They note that anyone with more than $200 to spend could extract much more data.

It’s important to remember that these companies have already scraped much of the web, so every tweet you ever sent, every cringey Instagram post and every political screed on Facebook is already in the training data sets. There’s not much you can do about that. However, when using these models, you should be aware of the risks of inputting personal or sensitive information.
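If you paste text into a chatbot regularly, one low-tech precaution is to scrub the obvious identifiers first. The snippet below is a minimal sketch of that idea in Python, not a real privacy tool: the pattern names and the `redact` function are made up for illustration, and simple regular expressions like these will miss names, addresses and plenty of other personal details.

```python
import re

# Illustrative patterns only (assumptions for this sketch).
# Real PII detection needs far more than a few regexes.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "URL": re.compile(r"https?://\S+"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact(text: str) -> str:
    """Replace each match of each pattern with a bracketed label."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


if __name__ == "__main__":
    prompt = "Email jane.doe@example.com or call +1 (555) 123-4567."
    # Print the scrubbed prompt before pasting it into a chatbot.
    print(redact(prompt))
```

Run it on a draft prompt and paste the scrubbed version instead of the original. Treat it as a first pass, and still read the text over yourself before sending anything sensitive.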

There’s no reason to panic, however. The companies behind all the major LLMs are aware of this problem and have taken steps to protect the data. When a chatbot “regurgitates” training data verbatim, it’s a bug, not a feature, and it’s much harder to do today than, say, a year ago. But there is always a chance (albeit a small one) that malicious actors could gain access to the training data either through creative prompting or some other means.

Read on to learn how to protect your data when working with LLMs. It’s easier than you think (except in one case).

How to Protect Your Data in ChatGPT

As the first LLM to gain public notice, OpenAI’s ChatGPT is probably the most used of the major AI models. That means many people are helping train it through their daily prompts and queries. If you don’t wish to have your data used for training, follow these steps:

As a logged-in user, go to Account in the upper right corner:

Click on Settings, and then click on Data Controls:

Change the “Improve the model for everyone” setting to “off.”

These settings also apply to the Mac desktop app and the iOS app.

How to Protect Your Data in Claude.ai

Anthropic says it doesn’t use your inputs to Claude to train its models unless you explicitly flag a response by clicking one of the thumbs-up/down feedback buttons, found at the bottom of the chats, like so:

So you don’t need to do anything to protect your data with Claude.ai. Don’t click either thumb if you don’t wish your data to be used for training. Anthropic does reserve the right to review your inputs when “your conversations are flagged for Trust & Safety review (in which case we may use or analyze them to improve our ability to detect and enforce our Usage Policy, including training models for use by our Trust and Safety team, consistent with Anthropic’s safety mission).”

How to Protect Your Data in Google Gemini

Google says humans… er, people may review its Gemini chats to improve the AI, but if you want to opt out of that, here’s what to do:

Log into Gemini and then choose “Activity” in the lower left sidebar.

Select the “Turn Off” dropdown and choose either to deactivate Gemini Apps Activity or to opt out and delete chat data.

Just remember that this only applies to future chats; data you’ve already contributed isn’t erased. According to Google’s Gemini privacy policy, any conversations that Google has already reviewed may be kept for up to three years.

How to Protect Your Data in X’s Grok

Grok-2, the AI model from Elon Musk’s X.ai, has fewer protections and guardrails than other AI models. Indeed, it’s described as having “a twist of humor and a dash of rebellion.” I guess it’s funny and rebellious that members of X.com are automatically dragooned into having their activity used to train Grok, whether they use the AI or not.

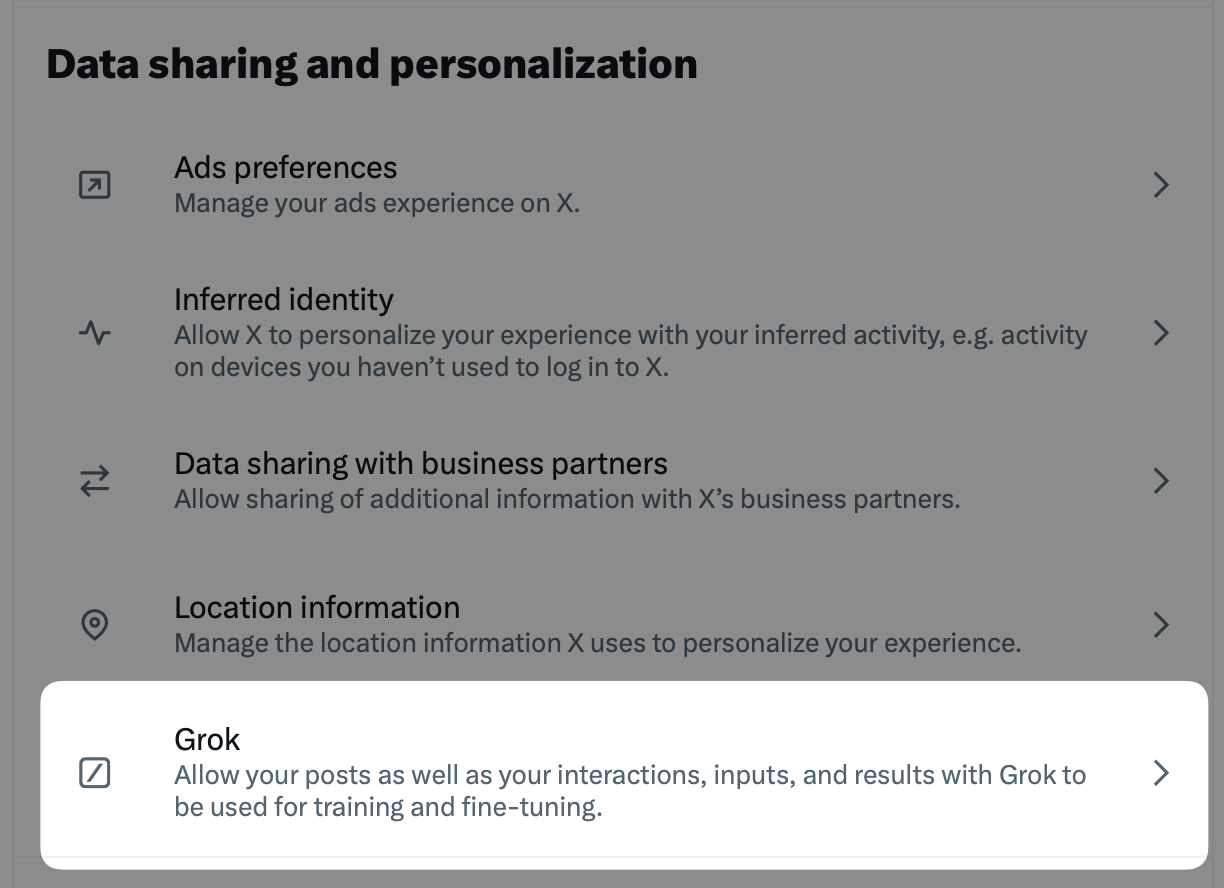

But you can opt out by logging into X, clicking the “More” three-dot button on the left sidebar, and then drilling down into Settings and Privacy > Privacy and Safety > Grok (under “Data sharing and personalization”).

From there, you can opt out of the data sharing and delete the conversations you might have had with Grok.

How to Protect Your Data in Microsoft Copilot

Microsoft is a partner of OpenAI, so its Copilot products use a version of ChatGPT. Its privacy page says that data from commercial customers or users logged into an “organizational M365/EntraID account” (in other words, work accounts) is not used to train the models, nor does Microsoft train on data from M365 personal or family subscriptions.

Microsoft recently introduced privacy settings you can fiddle with, but they are a little hidden.

Once signed in, click on your profile picture in the top right corner. Click on your email address and name in the first box to see this window. Click on “Privacy.”

This will open a window where you can turn off model training and personalization and export or delete your chat history.

Microsoft has an in-depth privacy page that explains in detail how training data is used and from whom it's collected.

How to Protect Your Data in Meta.ai

Saving the worst for last: Meta is a hungry, hungry hippo for data. Opting out of having your personal data on Facebook, Instagram, Threads or WhatsApp used for AI training is a convoluted and non-obvious process. To make matters worse, Meta doesn’t even guarantee it will opt you out, only that it will “review objection requests in accordance with relevant data protection laws.”

The United States doesn’t have many “relevant data protection laws,” so your chats with Meta.ai will absolutely be used to train its LLM, and there’s nothing Americans can do about that. There is no opt-out option. Meta is also free to use public posts and comments on Facebook and Instagram to train its LLMs. This has not pleased many social media users.

In the U.S., the best way to stop Meta from using your data to train is to set all your posts and comments on Facebook to something other than Public. You’ll find these settings buried in your account settings available (on the web) in the upper right corner of Facebook. Select Settings & Privacy > Settings > Default audience settings on the right sidebar.

For Instagram, you’ll enter this privacy maze on the left sidebar on Instagram (again on the web) under More > Settings > Account Privacy.

In both cases, this is an all-or-nothing setting. Facebook asks you to set your privacy settings to Public, Friends, or Custom, which involves a great deal of fiddling with toggle switches. Instagram is just Public or Private.

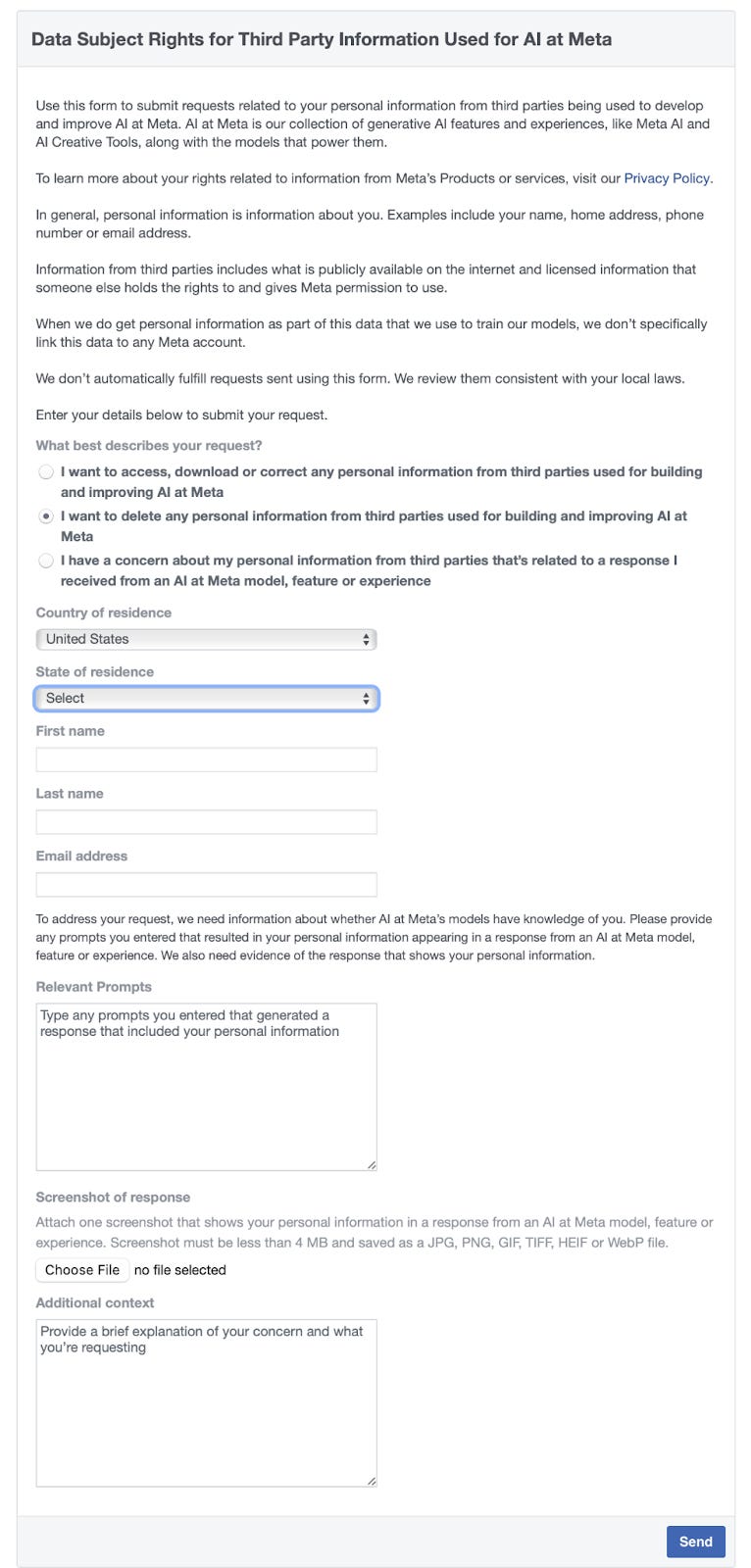

Alternatively, you can try to fill out the “Data Subject Rights for Third Party Information Used for AI at Meta” form. I found that link buried in an article on Spinterra.com, so it’s not like Meta is making this easy.

Go to that link and click the middle choice, “I want to delete my personal information…” Fill out the form, and you’ll have to provide examples of how Meta.ai knows you. This is a high bar for regular folks to clear, and it only applies to third-party data, not anything from Zuck’s gaping maw of data-sucking.

Things are a little better if you’re in the EU or the United Kingdom, thanks to more robust privacy laws. I don’t have an EU Facebook account, so I can’t demonstrate the following steps, but according to MIT Technology Review, you should do the following:

For Facebook accounts

Log into your account and access the new privacy policy here. At the top of the page there should be a box that says “Learn more about your right to object.” Click that link.

Or, click on your account icon in the top right corner and then select Settings and Privacy > Privacy Center and look for a dropdown menu in the left sidebar that says “How Meta uses information for generative AI models and features.” Click that and scroll down to “Right to Object.”

Fill in the form with your information (ironic, no?). You will have to justify opting out by explaining how Meta’s data processing affects you. You will probably have to confirm your email address.

You’ll eventually get an email and a Facebook notification that your request has been successful. The MIT Tech Review author said she got it within a few minutes.

For Instagram accounts

Log into your account and go to your profile page. Click on the three lines in the top-right corner.

Look for “More info and support” and click “About.” Then click — again! — on “Privacy Policy” and then look for the “Learn more about your right to object” link.

Follow the steps for Facebook from there.